Project information

- Category: Research Paper

- Affiliation: Louisiana State University (LSU)

- Status: Presented at DFRWS EU 2025

- Project URL: https://www.sciencedirect.com/science/article/pii/S2666281725000113

ForensicLLM: A Local Large Language Model for Digital Forensics

Abstract

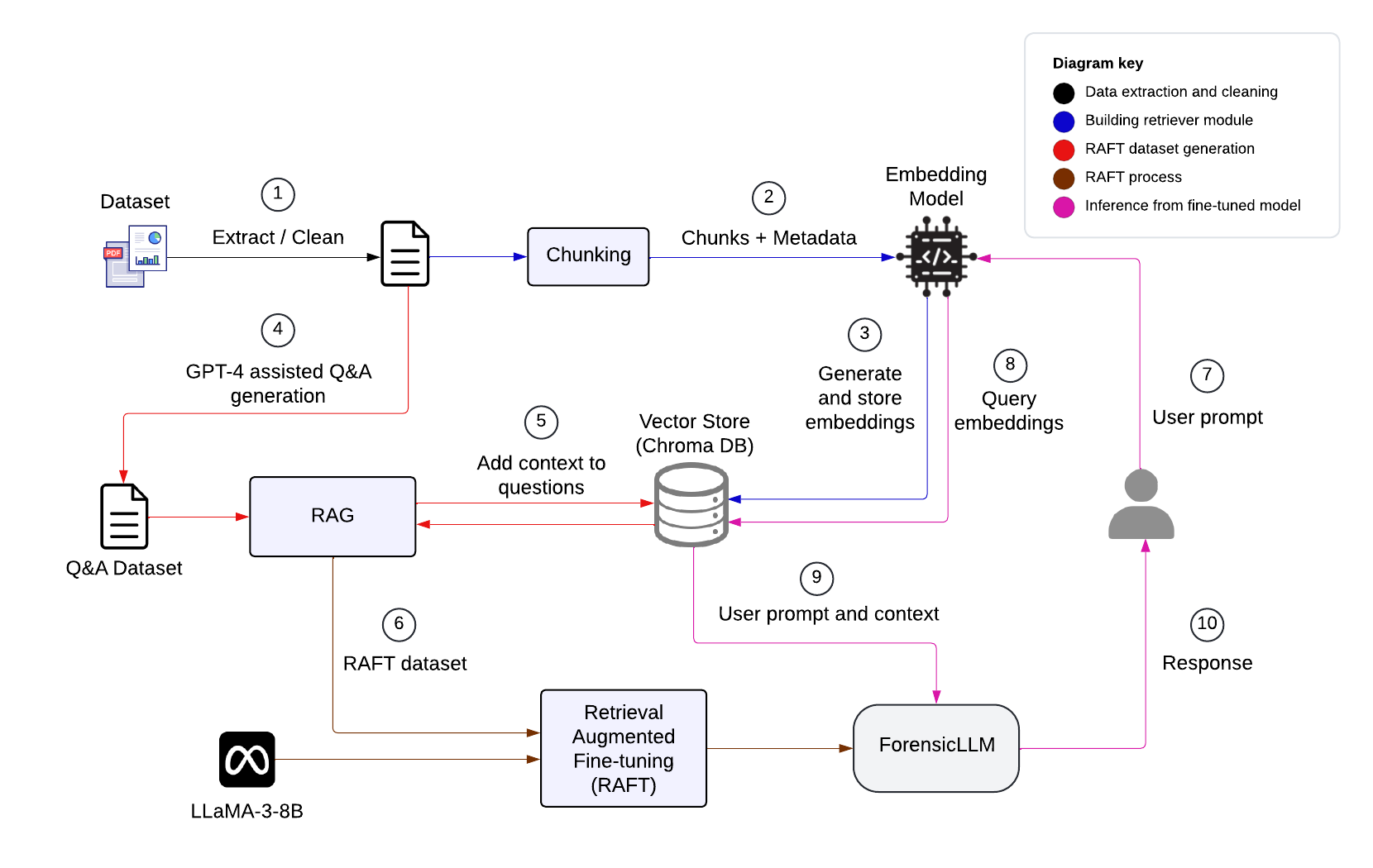

Large Language Models (LLMs) excel in diverse natural language tasks but often lack specialization for fields like digital forensics. Their reliance on cloud-based APIs or high-performance computers restricts their use in resource-limited environments, and hallucinated responses could compromise their applicability in forensic contexts. We introduce ForensicLLM, a 4-bit quantized LLaMA-3.1-8B model fine-tuned using Retrieval Augmented Fine-tuning (RAFT) on 6739 Q&A samples extracted from 1082 digital forensic research articles and curated digital artifacts. In an evaluation on 2244 Q&A samples, ForensicLLM outperformed the base LLaMA-3.1-8B model by 4.06%, 5.43%, and 15.79% on BERTScore F1, BGE-M3 cosine similarity, and G-Eval, respectively. Compared to LLaMA-3.1-8B + Retrieval Augmented Generation (RAG), it achieved improvements of 3.46%, 3.25%, and 4.61% on the same metrics. ForensicLLM accurately attributes sources 86.6% of the time, with 81.2% of the responses including both authors and title. Additionally, a user survey conducted with digital forensics professionals confirmed that both ForensicLLM and the RAG model improved significantly on the base model across multiple evaluation metrics. ForensicLLM showed strength in the “correctness” and “relevance” metrics, while the RAG model was appreciated for providing more detailed responses. These advancements mark ForensicLLM as a transformative tool in digital forensics, elevating model performance and source attribution in critical investigative contexts.
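
One of the metrics named in the abstract, BGE-M3 cosine similarity, compares an embedding of a model's answer with an embedding of the reference answer. As a minimal sketch of that comparison step only, the Python below computes cosine similarity between two vectors and averages it over a set of answer pairs. The function names and the toy vectors are illustrative assumptions; real scores would use embeddings produced by the BGE-M3 model, which is not loaded here, and this is not the authors' evaluation code.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def mean_similarity(pairs):
    """Average cosine similarity over (answer_vec, reference_vec) pairs."""
    if not pairs:
        raise ValueError("need at least one pair")
    return sum(cosine_similarity(a, r) for a, r in pairs) / len(pairs)


if __name__ == "__main__":
    # Toy 3-dimensional stand-ins for real sentence embeddings (assumption).
    answer_vec = [0.2, 0.7, 0.1]
    reference_vec = [0.25, 0.65, 0.05]
    print(round(cosine_similarity(answer_vec, reference_vec), 4))
```

A score near 1 means the two embeddings point in nearly the same direction, which is read as the generated answer being semantically close to the reference.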